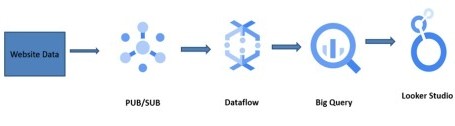

Designing a Streaming Data Pipeline with DataFlow and Visualization Dashboard Using Looker

motivitylabs

September 27, 2023
